Comprehensive Overview of Depth Cameras

What Is a Depth Camera?

There are different types of 3D sensors. They differ in how they acquire data from the world and in how that data is processed into a convenient representation, and they also vary in resolution, range and other characteristics. This page gives a brief overview of the main 3D sensor types and a short survey of the sensors currently available.

João Alves, Aivero

Last updated: 20.06.2023


Depth Camera Overview

What are depth cameras used for?

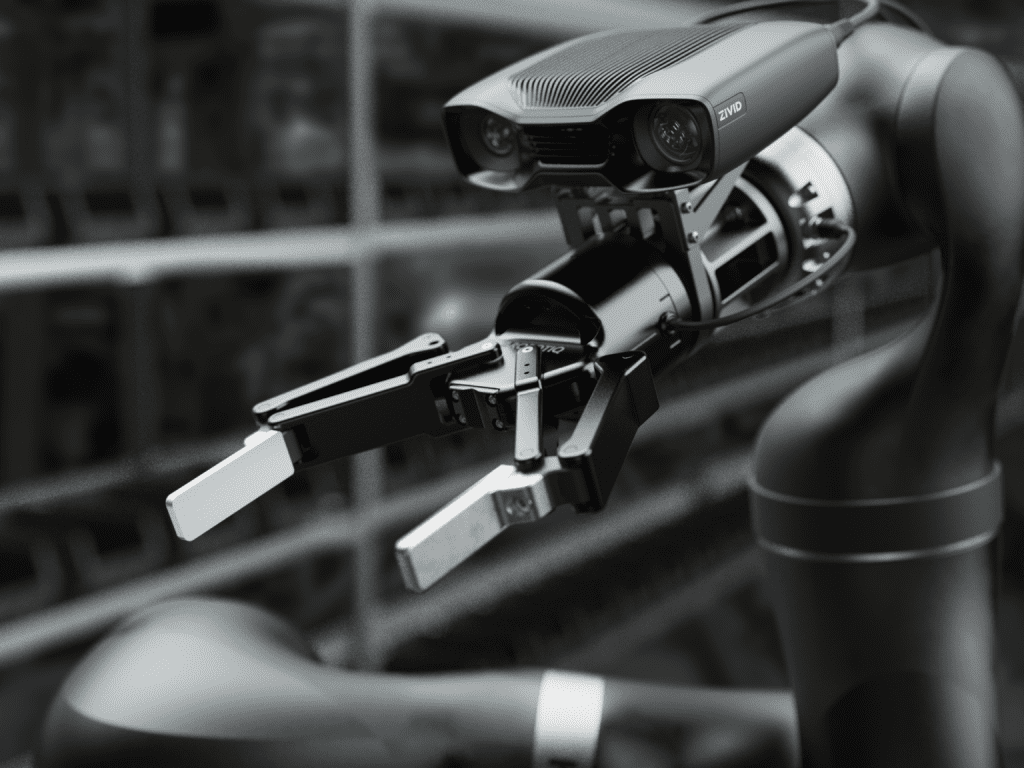

Depth-sensing cameras enable machines to perceive the environment in three dimensions. Because of the richer information they provide, depth cameras have gained importance in applications such as vision-guided robotics, inspection and monitoring. 3D data is also less susceptible to environmental disturbances such as changing light conditions, so machines become more reliable and precise when performing tasks such as picking up an object and placing it elsewhere.

Depth Camera Sensor Types

Depth cameras typically measure depth using stereo vision, time-of-flight (ToF), LiDAR or structured light.

Stereo sensors

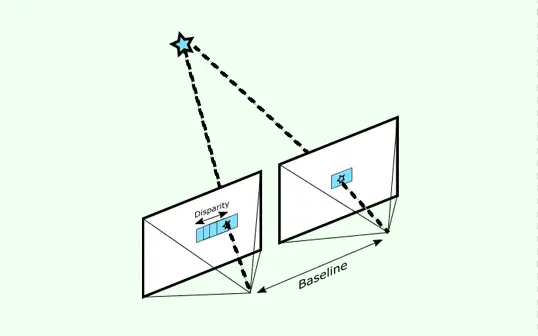

Stereo sensors mimic human vision by using two cameras that face the scene with some distance between them (the baseline, see Figure 1). The images from both cameras are used to extract and match visual features (relevant visual information) in order to obtain a disparity map between the two views.
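
To illustrate the matching step, the sketch below uses plain sum-of-absolute-differences (SAD) block matching along a single rectified scanline. This is a deliberately simpler technique than the feature extraction a real camera may perform. It assumes rectified images, so that a point at column x in the left image appears at column x - d in the right image; the function name `match_scanline` and its `window` and `max_disp` parameters are hypothetical.

```python
from typing import List, Optional


def match_scanline(left: List[float], right: List[float],
                   window: int = 2, max_disp: int = 8) -> List[Optional[int]]:
    """Estimate a disparity for each pixel of a rectified left scanline.

    For every candidate disparity d, the window around column x in `left`
    is compared with the window around column x - d in `right` using the
    sum of absolute differences (SAD). The d with the lowest cost wins.
    Pixels whose window never fits inside both scanlines get None.
    """
    n = len(left)
    disparities: List[Optional[int]] = []
    for x in range(n):
        best_d: Optional[int] = None
        best_cost = float("inf")
        for d in range(max_disp + 1):
            cost = 0.0
            valid = True
            for k in range(-window, window + 1):
                xl, xr = x + k, x + k - d
                if not (0 <= xl < n and 0 <= xr < len(right)):
                    valid = False  # window falls off the image border
                    break
                cost += abs(left[xl] - right[xr])
            # Strict "<" keeps the smallest d when several costs tie.
            if valid and cost < best_cost:
                best_d, best_cost = d, cost
        disparities.append(best_d)
    return disparities
```

In a uniform region, such as a white wall, every candidate disparity produces the same cost, so the chosen value is arbitrary. This is why matching-based stereo struggles in featureless scenes.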

Disparity is inversely proportional to depth, so the disparity map can easily be converted into a depth map. Because depth is recovered by matching features, some sensors of this type only work well in feature-rich environments and encounter problems in featureless ones (e.g. a white wall). On the other hand, this type of sensor usually allows for higher depth image resolutions than other cameras.
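
For a rectified stereo pair, this inverse relationship is usually written as Z = f · B / d, where f is the focal length in pixels, B the baseline in metres and d the disparity in pixels. The minimal sketch below applies it to a small disparity map. The function names and the convention of returning `None` for zero or negative disparities (no valid match) are assumptions made for illustration.

```python
from typing import List, Optional


def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float) -> Optional[float]:
    """Depth in metres from disparity via Z = f * B / d (rectified stereo)."""
    if disparity_px <= 0:
        # No valid match (or a point at infinity): depth is undefined.
        return None
    return focal_px * baseline_m / disparity_px


def disparity_map_to_depth(disparity_map: List[List[float]], focal_px: float,
                           baseline_m: float) -> List[List[Optional[float]]]:
    """Apply the disparity-to-depth conversion to every pixel of a 2D map."""
    return [[disparity_to_depth(d, focal_px, baseline_m) for d in row]
            for row in disparity_map]
```

With hypothetical values of f = 640 px and B = 0.05 m, a disparity of 32 px gives 1.0 m and a disparity of 64 px gives 0.5 m: doubling the disparity halves the depth.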

These feature detection and matching computations are usually done onboard the camera and typically require more processing power than other methods. The working distance of a stereo sensor is also limited by the baseline between its cameras, because the depth error increases quadratically with the object's distance from the sensor. Typical applications include feature-rich environments, both indoors and outdoors.
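
The quadratic growth follows from differentiating Z = f · B / d: a disparity error Δd produces a depth error of roughly ΔZ ≈ Z² · Δd / (f · B). The sketch below evaluates this first-order approximation. The 0.25 px matching error and the camera parameters are assumed example values, not specifications of any particular camera, and the function name is hypothetical.

```python
def stereo_depth_error(depth_m: float, focal_px: float, baseline_m: float,
                       disparity_error_px: float) -> float:
    """First-order depth uncertainty of stereo: dZ ~= Z**2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


if __name__ == "__main__":
    # Assumed example camera: f = 640 px, B = 5 cm, 0.25 px matching error.
    for z in (1.0, 2.0, 4.0):
        err = stereo_depth_error(z, focal_px=640.0, baseline_m=0.05,
                                 disparity_error_px=0.25)
        print(f"Z = {z:.0f} m -> depth error ~ {err * 1000:.1f} mm")
```

Doubling the distance quadruples the error, while doubling the baseline halves it, which is why stereo sensors intended for longer ranges need a wider baseline.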

Time-of-Flight

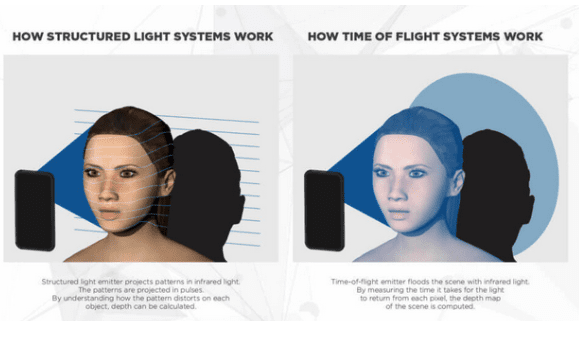

Time-of-flight (ToF) sensors flood the scene with light and calculate depth from the time the light takes to return to the sensor. Each pixel relates to one beam of light projected by the device, which provides greater data density, narrower shadows behind objects and more straightforward calibration (no stereo matching).
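
The distance measured by a ToF pixel follows from the round-trip time t of the light: the light travels to the object and back, so d = c · t / 2. The sketch below, with hypothetical function names, also computes the inverse, which shows how fine the timing must be: a distance of 1 m corresponds to a round trip of only about 6.7 ns.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target; the light covers the path twice, so halve it."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def tof_round_trip_s(distance_m: float) -> float:
    """Round-trip time the sensor has to resolve for a given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S
```

For example, a round trip of 10 ns corresponds to roughly 1.5 m.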

Since they rely on projected light, these sensors are sensitive to certain types of surfaces, such as very reflective or very dark ones, which usually show up as invalid data in the image. However, they are much more robust to low or dim lighting than stereo sensors, which depend on the scene lighting. These sensors can be used both indoors and outdoors.

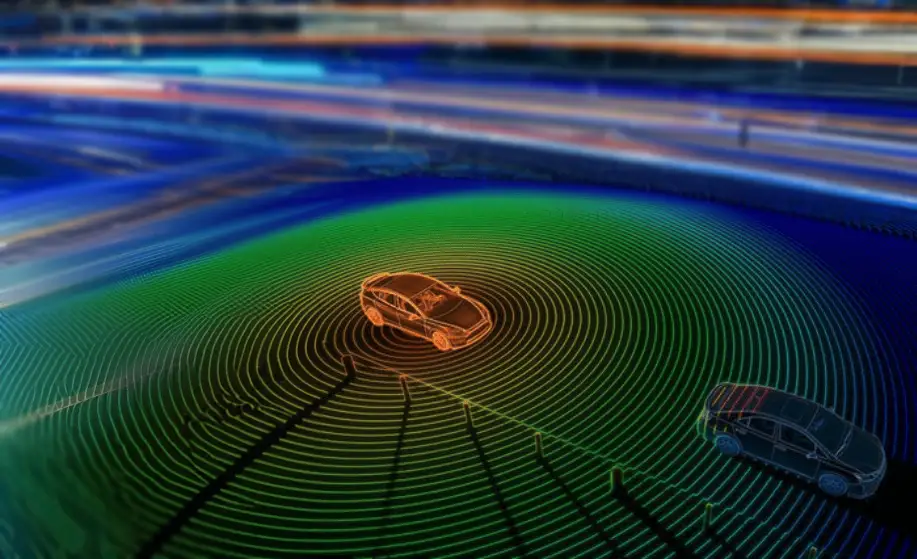

Light Detection and Ranging

Light detection and ranging (LiDAR) sensors work like ToF sensors but scan the scene. They are usually more accurate than ToF sensors because they use multiple IR pulses, whereas ToF sensors typically use a single light pulse to obtain their image. By mapping the environment point by point instead of in a single burst of points (as in ToF), the noise for each point is reduced, producing more accurate results. Furthermore, LiDAR can usually measure at greater distances than traditional ToF or stereo sensors.
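
Because a scanning LiDAR measures the environment point by point, each return is typically reported as a range together with the beam's azimuth and elevation angles, and these are converted into a 3D point cloud. The sketch below assumes a right-handed sensor frame with x forward, y left and z up, azimuth measured from the x axis toward y, and elevation measured up from the horizontal plane. Actual axis conventions differ between vendors, and the function names are hypothetical.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def scan_return_to_xyz(range_m: float, azimuth_rad: float,
                       elevation_rad: float) -> Point:
    """Convert one (range, azimuth, elevation) return to sensor-frame x, y, z."""
    horizontal_m = range_m * math.cos(elevation_rad)  # projection onto x-y plane
    x = horizontal_m * math.cos(azimuth_rad)
    y = horizontal_m * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


def scan_to_point_cloud(returns: Iterable[Tuple[float, float, float]]) -> List[Point]:
    """Build a point cloud from a sequence of individual scan returns."""
    return [scan_return_to_xyz(r, az, el) for r, az, el in returns]
```

Since the returns of one sweep are captured at slightly different times, anything that moves during the sweep ends up distorted in the resulting cloud.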

However, LiDAR sensors are usually more expensive than other 3D sensors and, because they scan the scene over time, can be affected by highly dynamic environments (motion blur).

Structured Light

Structured light (SL) sensors project a known IR pattern onto the scene. The way the pattern deforms on the surfaces it hits is used to construct the depth map.
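
One common way to turn the pattern deformation into depth, shown here as an assumption about how such a sensor may work, is to triangulate between projector and camera much as in stereo. If the shift d (in pixels) of a pattern feature is measured relative to where it appears on a reference plane at a known distance Z0, then 1/Z = 1/Z0 + d / (f · b), with f the camera focal length in pixels and b the projector-camera baseline. The sketch assumes the sign convention that d is positive for points closer than the reference plane; the function name and example values are hypothetical.

```python
from typing import Optional


def structured_light_depth(shift_px: float, ref_depth_m: float,
                           focal_px: float, baseline_m: float) -> Optional[float]:
    """Depth from a pattern shift relative to a reference plane at ref_depth_m.

    Uses 1/Z = 1/Z0 + d / (f * b). Here d > 0 means the point is closer
    than the reference plane (sign convention assumed for this sketch).
    """
    inverse_depth = 1.0 / ref_depth_m + shift_px / (focal_px * baseline_m)
    if inverse_depth <= 0:
        # Shift too large in the "farther" direction: no physical depth.
        return None
    return 1.0 / inverse_depth
```

With an assumed f = 580 px, b = 0.075 m and a reference plane at 1 m, a zero shift returns the reference depth, and a shift of f · b = 43.5 px places the point at 0.5 m.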

This type of sensor provides its own illumination and therefore does not require an external light source. It is mainly used indoors because it is not robust to sunlight, which can interfere with the projected light pattern.
